Spark Read S3

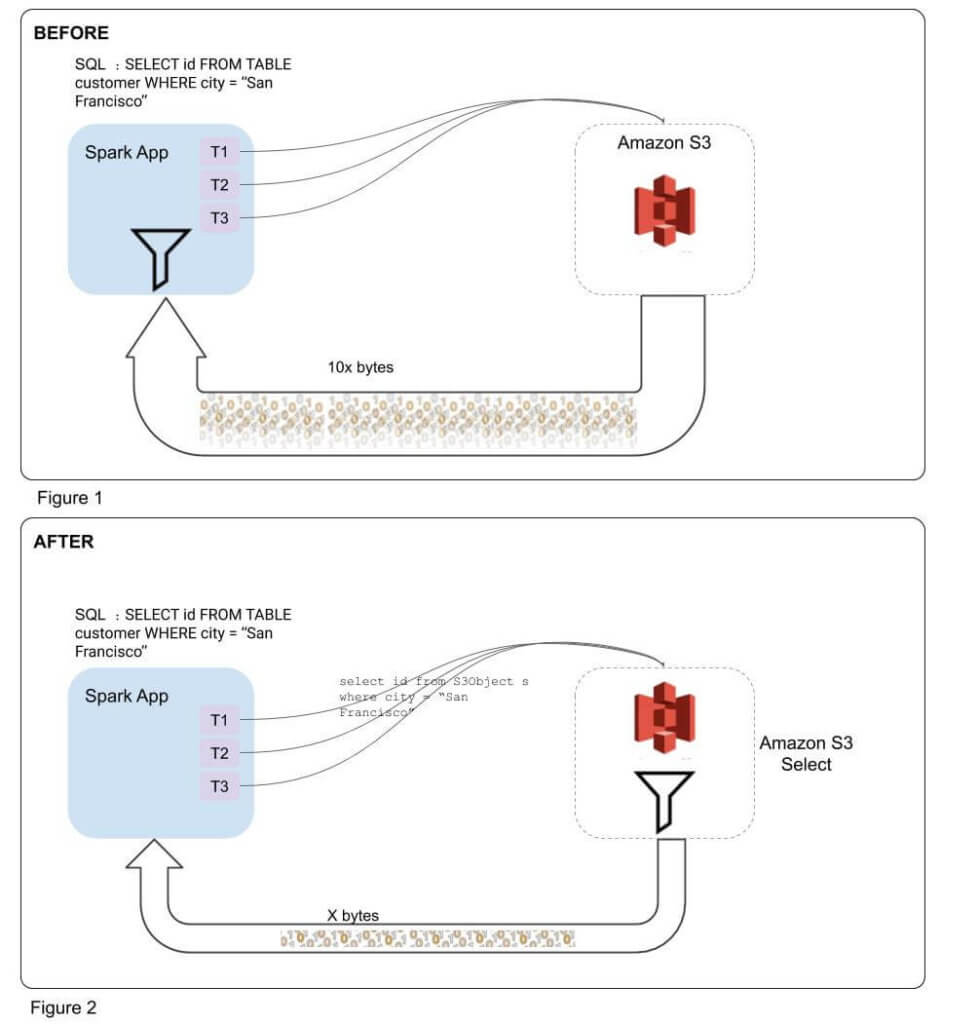

Spark Read S3 - Spark can read data stored in Amazon S3 directly into a DataFrame. When Spark is running in a cloud infrastructure, the credentials are usually set up automatically; otherwise, you can set Spark properties to configure AWS keys to access S3. Spark SQL provides spark.read().text("file_name") to read a file or directory of text files into a Spark DataFrame, and dataframe.write().text("path") to write to a text file. When reading a text file, each line becomes a row in a single string column named value. S3 Select allows applications to retrieve only a subset of data from an object, and with Amazon EMR release 5.17.0 and later, you can use S3 Select with Spark on Amazon EMR. We are going to create a corresponding Glue Data Catalog table. The following examples demonstrate basic patterns of accessing data in S3 using Spark.
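
As a minimal sketch of configuring AWS keys through Spark properties, the snippet below assumes the hadoop-aws (S3A) connector is on the classpath. The function names, app name, and bucket path are hypothetical placeholders. On a cluster with an instance role, the explicit keys can usually be left out.

```python
def s3a_key_properties(access_key, secret_key):
    """Spark properties that pass static AWS keys to the S3A connector."""
    return {
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
    }


def build_session(app_name, properties):
    """Create a SparkSession with the given properties applied."""
    # Imported lazily so the helper above works without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in properties.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


def read_text_lines(spark, path):
    """Read a text file or directory; each line becomes one row in column `value`."""
    return spark.read.text(path)


# Example usage (hypothetical bucket; keys taken from the environment):
# import os
# props = s3a_key_properties(os.environ["AWS_ACCESS_KEY_ID"],
#                            os.environ["AWS_SECRET_ACCESS_KEY"])
# spark = build_session("s3-read-demo", props)
# read_text_lines(spark, "s3a://my-bucket/logs/").show(5, truncate=False)
```

Keeping the property names in one helper makes it easier to switch to a credentials provider later without touching the read code.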

Using spark.read.csv(path) or spark.read.format("csv").load(path), you can read a CSV file from Amazon S3 into a Spark DataFrame; both methods take the file path to read as an argument. Parquet files can be read from Amazon S3 as well. The examples show the setup steps, application code, and input and output files located in S3. In this project, we are going to upload a CSV file into an S3 bucket, either with automated Python/shell scripts or manually.
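
The two CSV read styles and the Parquet read might look like the following sketch. It assumes an existing SparkSession named `spark` with S3 access already configured. The helper `s3a_uri` and the bucket and key names are illustrative only.

```python
def s3a_uri(bucket, key):
    """Build an s3a:// URI from a bucket name and an object key."""
    return f"s3a://{bucket}/{key.lstrip('/')}"


def read_csv(spark, path):
    """Load a CSV file with the shorthand reader."""
    return spark.read.csv(path)


def read_csv_generic(spark, path):
    """Load the same CSV file through the generic format/load API."""
    return spark.read.format("csv").load(path)


def read_parquet(spark, path):
    """Load a Parquet file or directory; the schema comes from the file metadata."""
    return spark.read.parquet(path)


# Example usage (hypothetical bucket and keys):
# csv_df = read_csv(spark, s3a_uri("my-bucket", "input/people.csv"))
# parquet_df = read_parquet(spark, s3a_uri("my-bucket", "input/people.parquet"))
```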

A common question: I have a bunch of files in an S3 bucket that follow the pattern Myfile_2018_(150).tab, and I would like to create a single Spark DataFrame by reading all of these files. How do I create this regular expression pattern and read the files? By default, the read method treats the header as a data record, so it reads the column names as data unless the header option is set to true. Databricks recommends using secret scopes for storing all credentials. This protects the AWS key while allowing users to access S3.
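
One possible approach to the multi-file question is a wildcard path, since Spark paths accept Hadoop-style glob patterns rather than full regular expressions. The sketch below assumes the .tab files are tab-delimited. The bucket, prefix, and helper names are hypothetical, and the local `fnmatch` check only approximates Hadoop glob matching.

```python
from fnmatch import fnmatch


def tab_files_glob(bucket, prefix, stem="Myfile_2018_"):
    """Build a glob that matches every file starting with `stem` and ending in .tab."""
    return f"s3a://{bucket}/{prefix.strip('/')}/{stem}*.tab"


def matches_locally(file_name, stem="Myfile_2018_"):
    """Rough local sanity check; fnmatch is similar to, not identical to, Hadoop globs."""
    return fnmatch(file_name, f"{stem}*.tab")


def read_tab_files(spark, pattern):
    """Read all matching files into a single DataFrame."""
    # sep="\t" is an assumption based on the .tab extension.
    return spark.read.csv(pattern, sep="\t")


# Example usage (hypothetical bucket and prefix):
# df = read_tab_files(spark, tab_files_glob("my-bucket", "incoming"))
```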

We Are Going To Create A Corresponding Glue Data Catalog Table.

S3 Select allows applications to retrieve only a subset of data from an object. With Amazon EMR release 5.17.0 and later, you can use S3 Select with Spark on Amazon EMR. Spark can also read and write text files from and to Amazon S3.
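
A hedged sketch of an S3 Select read follows. The `s3selectCSV` format is only available on Amazon EMR (release 5.17.0 and later), so this code will not run on a plain Spark installation. The option values, schema string, and path are assumptions for illustration.

```python
def s3_select_options(header=True, delimiter=","):
    """Reader options for the EMR S3 Select CSV source, as strings."""
    return {"header": str(header).lower(), "delimiter": delimiter}


def read_with_s3_select(spark, path, schema, options):
    """Read CSV data through S3 Select so filtering happens inside S3 (EMR only)."""
    return (
        spark.read.format("s3selectCSV")
        .schema(schema)        # a StructType or a DDL string such as "id INT, name STRING"
        .options(**options)
        .load(path)
    )


# Example usage on EMR (hypothetical path and schema):
# df = read_with_s3_select(spark, "s3://my-bucket/input/",
#                          "id INT, name STRING", s3_select_options())
# df.where("id > 100").show()
```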

Databricks Recommends Using Secret Scopes For Storing All Credentials.

When Spark is running in a cloud infrastructure, the credentials are usually set up automatically. On Databricks, you can grant users, service principals, and groups in your workspace access to read the secret scope.
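
Reading the keys from a secret scope might look like the sketch below. It assumes a Databricks notebook, where `dbutils` and `spark` are predefined. The scope name and the two secret key names are hypothetical.

```python
def load_aws_keys(dbutils, scope,
                  access_key_name="aws-access-key",
                  secret_key_name="aws-secret-key"):
    """Fetch the AWS key pair from a Databricks secret scope."""
    access_key = dbutils.secrets.get(scope=scope, key=access_key_name)
    secret_key = dbutils.secrets.get(scope=scope, key=secret_key_name)
    return access_key, secret_key


def configure_s3a(spark, access_key, secret_key):
    """Apply the keys to the Hadoop configuration used by the S3A connector."""
    hadoop_conf = spark.sparkContext._jsc.hadoopConfiguration()
    hadoop_conf.set("fs.s3a.access.key", access_key)
    hadoop_conf.set("fs.s3a.secret.key", secret_key)


# Example usage in a notebook (hypothetical scope name):
# access_key, secret_key = load_aws_keys(dbutils, "aws")
# configure_s3a(spark, access_key, secret_key)
```

Because the keys never appear in the notebook source, users with read access to the scope can reach S3 without seeing the raw values in code.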

How Should I Load A File On S3 Using Spark?

The examples show the setup steps, application code, and input and output files located in S3. You can set Spark properties to configure AWS keys to access S3. You only need a basePath when you are providing a list of specific files within that path.
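
To illustrate the basePath remark, here is a small sketch that reads a chosen subset of partition directories while keeping the partition columns. It assumes a partitioned Parquet layout; the paths and helper names are hypothetical.

```python
def join_paths(base_path, relative_paths):
    """Turn paths relative to `base_path` into full paths."""
    base = base_path.rstrip("/")
    return [f"{base}/{p.strip('/')}" for p in relative_paths]


def read_selected(spark, base_path, relative_paths):
    """Read only the listed paths; basePath tells Spark where partition discovery starts."""
    full_paths = join_paths(base_path, relative_paths)
    return spark.read.option("basePath", base_path).parquet(*full_paths)


# Example usage (hypothetical layout s3a://my-bucket/events/year=YYYY/...):
# df = read_selected(spark, "s3a://my-bucket/events/", ["year=2018", "year=2019"])
# df.select("year").distinct().show()
```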

In This Spark Tutorial, You Will Learn What Apache Parquet Is, Its Advantages, And How To Read A Parquet File From An Amazon S3 Bucket Into A DataFrame And Write A DataFrame As A Parquet File To An Amazon S3 Bucket With A Scala Example.

By default, the read method treats the header as a data record, so it reads the column names as data unless the header option is set to true. In this project, we are going to upload a CSV file into an S3 bucket, either with automated Python/shell scripts or manually. Spark SQL provides spark.read().text("file_name") to read a file or directory of text files into a Spark DataFrame, and dataframe.write().text("path") to write to a text file.
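
The upload, header handling, and text round trip could be sketched as follows. The sketch assumes boto3 is installed and AWS credentials are available to it. All function names, bucket names, and paths are hypothetical.

```python
def upload_csv(local_path, bucket, key):
    """Upload a local CSV file to S3 with boto3."""
    # Imported lazily so the other helpers work without boto3 installed.
    import boto3

    boto3.client("s3").upload_file(local_path, bucket, key)


def csv_read_options(has_header=True, infer_schema=False):
    """Reader options; header defaults to false in Spark, so set it explicitly."""
    return {
        "header": str(has_header).lower(),
        "inferSchema": str(infer_schema).lower(),
    }


def read_csv_with_header(spark, path):
    """Read a CSV file, treating the first line as column names."""
    return spark.read.options(**csv_read_options()).csv(path)


def copy_text(spark, source_path, target_path):
    """Read text lines and write them back out as text files."""
    spark.read.text(source_path).write.text(target_path)


# Example usage (hypothetical names):
# upload_csv("people.csv", "my-bucket", "input/people.csv")
# df = read_csv_with_header(spark, "s3a://my-bucket/input/people.csv")
# copy_text(spark, "s3a://my-bucket/logs/", "s3a://my-bucket/logs-copy/")
```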